Today, we’re excited to announce another major milestone: Paxton AI has achieved 93.82% average accuracy on tasks in the Stanford Legal Hallucination Benchmark. This comes on the heels of our recent Paxton AI Citator release, which achieved a 94% accuracy rate on the separate Stanford CaseHOLD benchmark. This accomplishment highlights our dedication to transparency and accuracy in applying AI to legal tasks. To further this commitment, we are also introducing our new Confidence Indicator feature, which helps users evaluate the reliability of AI-generated responses.

Key Highlights:

- 93.82% average accuracy and a 94.7% non-hallucination rate on the Stanford Legal Hallucination Benchmark

- Launch of the new Confidence Indicator feature

- Exclusive 7-day free trial for legal professionals

Achieving 93.82% average accuracy on the Stanford Legal Hallucination Benchmark

Understanding the Stanford Legal Hallucination Benchmark

The Stanford Legal Hallucination Benchmark evaluates the accuracy of legal AI tools, measuring their ability to produce correct legal interpretations without errors or “hallucinations.” High performance on this benchmark indicates a system’s robustness and reliability, making it a critical measure for legal AI applications.

Why This Benchmark Is Important

Legal AI tools, like those developed at Paxton AI, are increasingly relied on in professional settings where accuracy can significantly impact legal outcomes. The benchmark measures various tasks such as case existence verification, citation retrieval, and identifying the authors of majority opinions. High performance in these areas signals that AI can be a trustworthy aid in complex legal analyses, potentially transforming legal research methodologies.

Details of the Stanford Legal Hallucination Benchmark

- Scope: The benchmark encompasses approximately 750,000 queries spanning a variety of legal questions and scenarios. These queries are derived from real-world legal questions that challenge the AI's understanding of law, its nuances, and its ability to apply legal reasoning.

- Task Variety: The tasks within the benchmark are designed to cover a wide range of legal aspects, including statutory interpretation, case law analysis, and procedural questions. This variety ensures a thorough evaluation of the AI's legal acumen across different domains.

- Complexity: The tasks vary in complexity from straightforward fact verification, such as checking the existence of a case, to more complex reasoning tasks like analyzing the precedential relationship between two cases.

Paxton AI's Testing Methodology

For our assessment, Paxton AI selected a random sample of 1,600 queries from the pool of approximately 750,000 in the benchmark. The sample was drawn to include examples from each task category we evaluated, keeping the evaluation balanced across task types.

- Random Sampling: Queries were randomly selected to cover the different legal challenges presented in the benchmark.

- Evaluation Criteria: Paxton AI processed each query, and the responses were evaluated for correctness, presence of hallucinations, and instances where the AI abstained from answering. Abstention is a critical capability in scenarios where the AI must recognize the limits of its knowledge or the ambiguity of the question.

- Selection of tasks: The benchmark paper describes 14 tasks (four low-complexity, four moderate-complexity, and six high-complexity). The published data file contains the eight low- and moderate-complexity tasks, but these are split further into 11 distinct tasks. For example, the task “does this case exist?” is split into separate tasks for real and non-existent cases. Of the 11 tasks in the data file, we excluded the “quotation” and “cited_precedent” tasks because they did not fit the architecture of our current testing framework; we wanted every evaluated task to run consistently within that framework. We also excluded the “year_overruled” task due to a discrepancy between the published dataset and the metadata Paxton relies on for answering questions. This left eight tasks in our evaluation.
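The sampling and exclusion steps above amount to a stratified random sample: drop the excluded tasks, then sample at random within each remaining task. The sketch below illustrates that idea; it is not Paxton's evaluation code. The three excluded task names come from this post, but the other task labels, the pool layout (a list of dicts with a `task` key), the helper name `stratified_sample`, and the equal per-task split are all hypothetical assumptions. The actual per-task sample counts are reported in the results table.

```python
import random
from collections import Counter, defaultdict

# Tasks this post says were excluded from the evaluation.
EXCLUDED_TASKS = {"quotation", "cited_precedent", "year_overruled"}


def stratified_sample(pool, total, seed=0):
    """Hypothetical helper: draw about `total` items, split as evenly as
    possible across non-excluded tasks, sampling at random within each task.

    Assumption: each item in `pool` is a dict with a "task" key.
    """
    rng = random.Random(seed)
    by_task = defaultdict(list)
    for item in pool:
        if item["task"] not in EXCLUDED_TASKS:
            by_task[item["task"]].append(item)

    tasks = sorted(by_task)
    base, extra = divmod(total, len(tasks))
    sample = []
    for i, task in enumerate(tasks):
        # Spread any remainder over the first `extra` tasks.
        quota = base + (1 if i < extra else 0)
        items = by_task[task]
        sample.extend(rng.sample(items, min(quota, len(items))))
    return sample


# Toy pool: 11 hypothetical task labels with 1,000 items each (not real data).
TASKS = [
    "affirm_reverse", "case_existence", "citation_retrieval", "court_id",
    "fake_case_existence", "fake_dissent", "fake_year_overruled",
    "majority_author", "quotation", "cited_precedent", "year_overruled",
]
pool = [{"task": t, "id": i} for t in TASKS for i in range(1000)]

sample = stratified_sample(pool, total=1600)
counts = Counter(item["task"] for item in sample)
print(len(sample), dict(counts))
```

With eight remaining tasks and a total of 1,600, this toy split yields 200 items per task; a real evaluation could just as well allocate unequal quotas per task.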

Transparency and Accessibility

- Open Data: Committed to transparency and fostering trust within the legal and tech communities, Paxton AI is releasing the detailed results of our tests on our GitHub repository. This open data approach allows users, researchers, and other stakeholders to review our methodologies, results, and performance independently.

- Continuous Improvement: The insights gained from this benchmark testing are invaluable for ongoing improvements. They help us identify strengths and areas for enhancement in our AI models, ensuring that we remain responsive to the needs of the legal community and maintain the highest standards of accuracy and reliability.

The Stanford Legal Hallucination Benchmark serves as a critical tool in our efforts to validate and improve the performance of our legal AI technologies. By participating in such rigorous testing and sharing our findings openly, Paxton AI demonstrates its commitment to advancing the field of legal AI with integrity and scientific rigor.

Our Results

Here are the detailed results from the Stanford Legal Hallucination Benchmark for Paxton AI:

Overall, the results show that Paxton AI achieved an average non-hallucination rate of 94.7% and an average accuracy of 93.82%. The evaluated tasks represent a range of common legal research and analysis activities, designed to test the AI's performance across various aspects of legal knowledge and reasoning. This diversity provides a broad assessment of how the AI handles different types of legal queries and information.

The bottom row of the table provides summary information:

- Average: This row shows the mean performance across all tasks, giving an overall picture of Paxton AI's performance on the benchmark.

These results highlight our commitment to transparency and our continuous effort to refine our AI models. The data for these results is available on our GitHub repository for further analysis and verification.

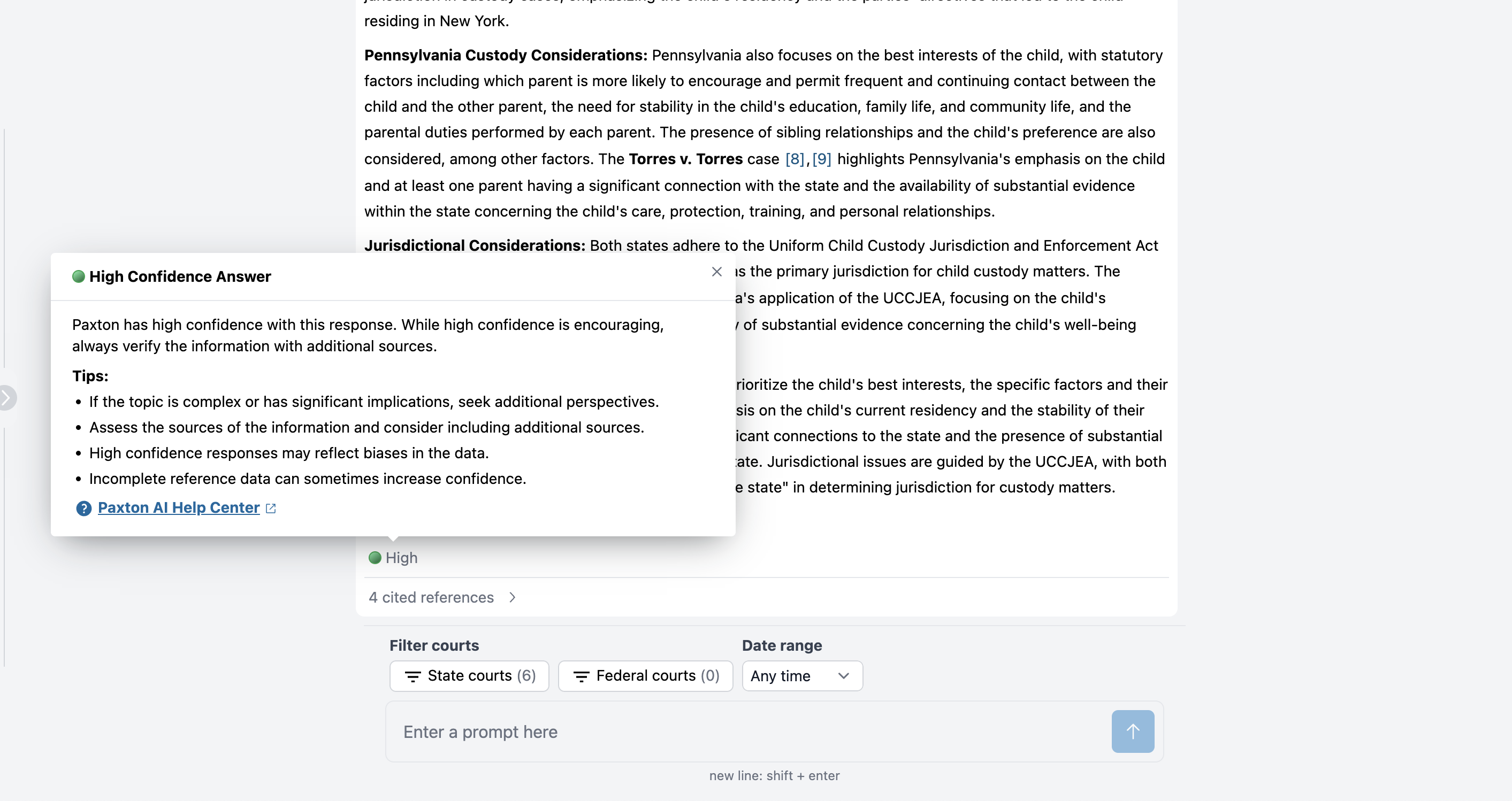

Launching the Paxton AI Confidence Indicator

To help users make the most of Paxton AI’s answers and ensure they can trust the information provided, we are excited to announce the launch of the Confidence Indicator. This new feature is designed to enhance user experience by rating each answer with a specific confidence level—categorized as low, medium, or high. Additionally, it offers valuable suggestions for further research, guiding users on how they can delve deeper into the topics of interest and verify the details. By providing these ratings and recommendations, we aim to empower users to make more informed decisions based on the AI's responses.

It is important to clarify that while large language models (LLMs) can generate confidence scores for their responses, these scores are not always indicative of the actual reliability or accuracy of the information provided. The Confidence Indicator in Paxton AI operates differently. Instead of relying solely on the model's internal confidence score, our Confidence Indicator evaluates the response based on a comprehensive set of criteria, including the contextual relevance, the evidence provided, and the complexity of the query. This approach ensures that the confidence level assigned is a more accurate reflection of the response's trustworthiness.

Confidence levels:

- Low: Paxton is unsure of its answer. This often happens when the query lacks context or involves an area that is not well covered by the source materials. In this case, we recommend giving Paxton more detail, checking whether the source materials correspond to your query, and breaking multi-part questions into smaller ones.

- Medium: Paxton has moderate confidence in the response. For less critical tasks or areas that have a lower need for accuracy, users can choose to rely on these responses. For higher confidence, users should follow up with more questions, provide additional context, or try breaking complex multi-part questions into smaller queries.

- High: Paxton is confident in its answer. While users should validate all AI-generated answers and read the citations provided, high confidence responses are of the highest quality and reliability.

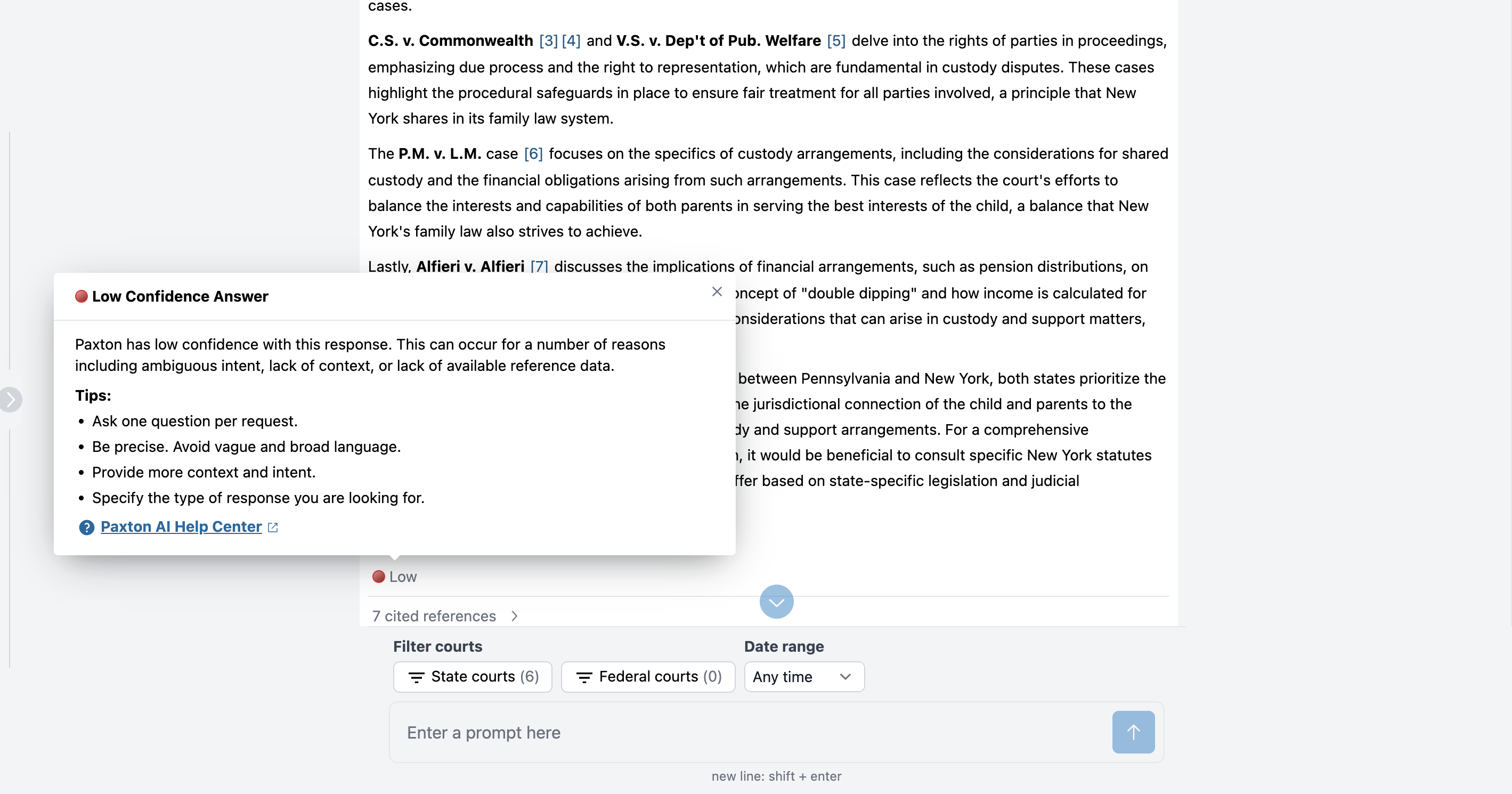

Walkthrough: Using the Paxton AI Confidence Indicator

With the new Confidence Indicator feature, users can quickly assess the reliability of Paxton’s responses and learn how to improve them.

For example, the query below was vague and unfocused and resulted in a Low Confidence response. Here, the user query was:

“I need to understand family law. I am working on a serious matter. It is very important to my client. The matter is in PA and NYC. custody issue.”

This prompt did not provide specific detail about the research question at issue and did not ask a particular research question. The user also selected only Pennsylvania as a source, even though the query suggested an interest in both Pennsylvania and New York law.

Paxton provided the most relevant cases available, but had low confidence that its response was well-suited to answer the user’s query.

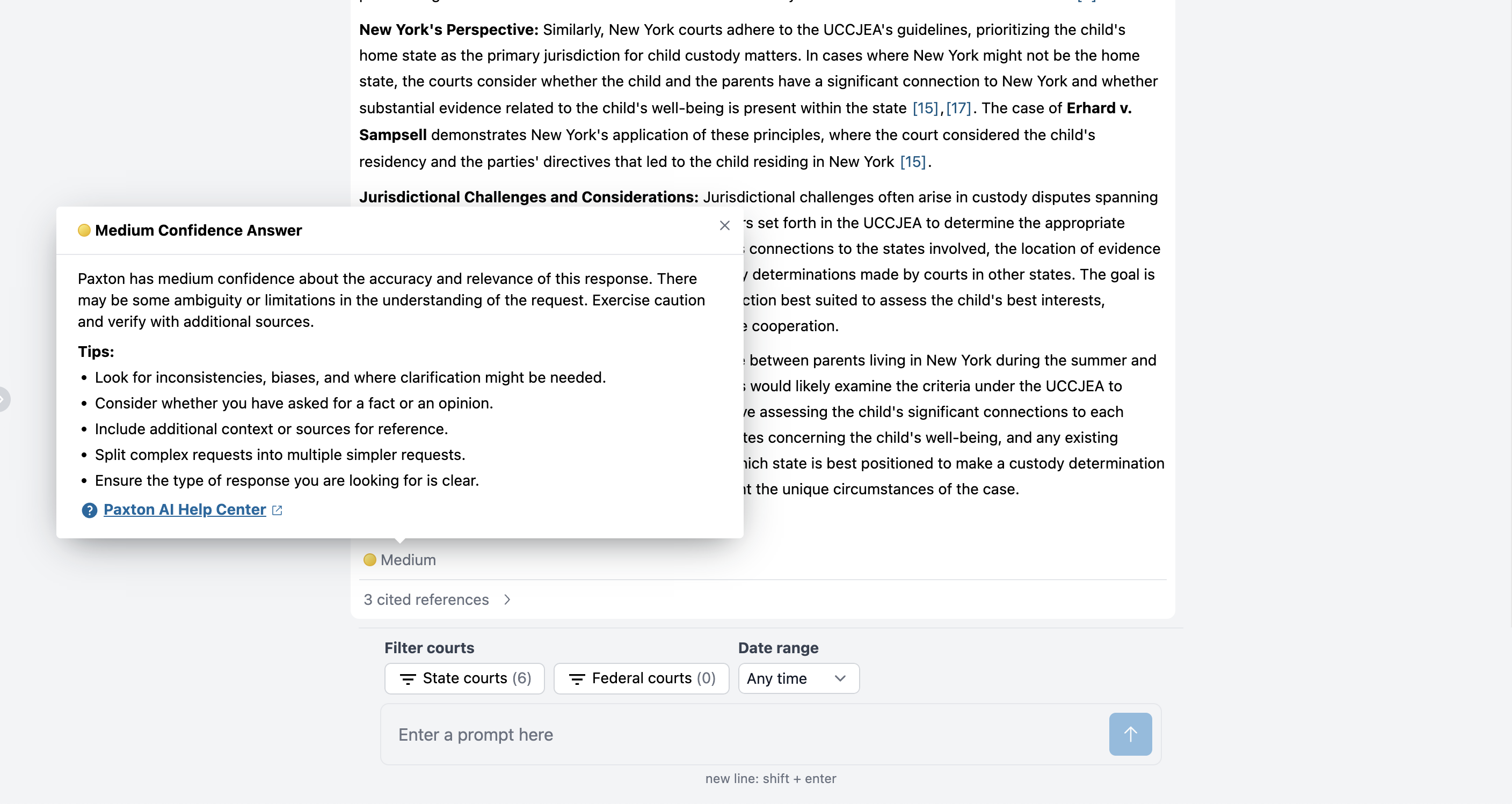

Next, the user offered more detail, changing the query to:

“I am working on a family law matter that may implicate both NY and PA law. Mother and father are getting divorced. They live in NY in the summer and PA during the school year. The parents are having a custody dispute. I am representing the father.”

The user also correctly selected both Pennsylvania and New York state courts as sources. These changes resulted in a Medium Confidence response. But while the user provided much more context, they still failed to ask a pointed, focused question.

Finally, to obtain a High Confidence response, the user amended the query to drop extraneous details, add important ones (such as the children’s ages), and ask more specific, focused questions:

“I am working on a family law matter that may implicate both NY and PA law. Mother and father are getting divorced, they have two children aged 12 and 15. What do courts in New York consider when determining custody? What do courts in Pennsylvania consider when determining custody? Please separate the analysis into two parts, NY and PA.”

Industry Benefits

The Paxton AI Confidence Indicator improves the user experience by quickly showing the confidence and reliability of Paxton’s AI-generated responses. By providing a transparent assessment of response quality, it helps speed up decision-making. Law firms, corporate legal departments, and solo practitioners can leverage this feature to mitigate risks associated with inaccurate legal information and improve the overall quality of their legal work.

Exclusive Trial Offer

We invite all legal professionals to try the Paxton AI Confidence Indicator with a 7-day free trial. After the trial, the subscription starts at $79 per month per user (for Paxton’s annual plan). Visit Paxton AI to start your trial and witness firsthand the precision and efficiency of AI-driven legal research.

Conclusion

Paxton AI is advancing the legal field with reliable, easy-to-use tools. The Stanford Legal Hallucination Benchmark results and our new Confidence Indicator feature underscore our dedication to enhancing the practice of law with AI. Paxton AI equips legal professionals with next-gen tools designed to navigate the complexities of modern legal challenges. Join us in shaping the future of legal research by embracing the potential of artificial intelligence.

Appendix: Glossary and Task Definitions

Stanford Legal Hallucination Benchmark

The table presents our results from the Stanford Legal Hallucination Benchmark, broken down by task and key performance metrics:

- Task: The specific legal task being evaluated.

- Is Correct: The percentage of responses that were accurate.

- Is Hallucination: The percentage of responses that contained false or fabricated information.

- Accuracy: In this context, accuracy refers to the proportion of responses for which the AI system provides correct answers without any hallucinations or errors. It measures how reliably the AI can interpret and apply legal information to produce valid results.

- Is Abstention: The percentage of cases where the AI chose not to answer due to uncertainty or insufficient information.

- Is Non-Hallucination: The percentage of responses that did not contain hallucinations (calculated as 100% minus the "Is Hallucination" percentage).

- Number of Samples: The number of examples tested for each task.

- Paxton AI Tool Evaluated: The specific Paxton AI tool used for the task (in this case, all tasks used the Case Law tool).
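The percentage columns defined above can be computed from labeled responses roughly as follows. This is a hedged sketch, not Paxton's scoring code. It assumes each response carries exactly one outcome label (`correct`, `hallucination`, or `abstention`), which this post does not state. It also assumes the Average row is an unweighted mean over tasks, which is likewise an assumption. Because the post does not spell out how "Accuracy" differs from "Is Correct," the sketch reports only the other columns. The function names and toy labels are hypothetical.

```python
from statistics import mean

OUTCOMES = ("correct", "hallucination", "abstention")


def task_metrics(labels):
    """Return percentage metrics for one task's list of outcome labels."""
    n = len(labels)
    if n == 0:
        raise ValueError("task has no samples")
    for label in labels:
        if label not in OUTCOMES:
            raise ValueError(f"unknown outcome: {label!r}")
    pct = {o: 100 * labels.count(o) / n for o in OUTCOMES}
    return {
        "is_correct": pct["correct"],
        "is_hallucination": pct["hallucination"],
        "is_abstention": pct["abstention"],
        # Defined in the glossary as 100% minus "Is Hallucination".
        "is_non_hallucination": 100 - pct["hallucination"],
        "num_samples": n,
    }


def average_row(per_task):
    """Unweighted mean of each percentage column across tasks (assumption)."""
    keys = ["is_correct", "is_hallucination", "is_abstention", "is_non_hallucination"]
    return {k: mean(m[k] for m in per_task.values()) for k in keys}


# Toy labels for two hypothetical tasks (not Paxton's data).
results = {
    "task_a": ["correct"] * 9 + ["hallucination"],
    "task_b": ["correct"] * 3 + ["abstention"],
}
per_task = {task: task_metrics(labels) for task, labels in results.items()}
print(average_row(per_task))
```

The weighting choice matters when tasks have different sample counts. In this toy data, the unweighted mean non-hallucination rate is 95%. Pooling all 14 responses instead gives 13/14, or about 92.9%.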

Task Descriptions:

- Affirm or Reverse: This task asks the AI to identify whether a higher court affirmed or reversed a lower court's decision in a given case.

- Case Existence: This task checks the AI's ability to correctly identify whether a given case actually exists in the legal record.

- Citation Retrieval: This task tests the AI's capability to accurately retrieve and provide the correct citation for a given legal case.

- Court ID: This task assesses the AI's ability to correctly identify which court heard a particular case.

- Fake Case Existence: Similar to the Case Existence task, but with fictitious cases. This tests the AI's ability to recognize and flag non-existent cases.

- Fake Dissent: Trick questions asking the AI to explain a dissenting opinion from a judge where no such dissenting opinion exists. The correct answer is either to abstain or, in some cases, identify that the judge in question actually affirmed and/or delivered the majority opinion of the court.

- Fake Year Overruled: Trick questions asking the AI to name the year that a given Supreme Court case was overruled for cases that have not been overruled. The correct answer is to say that the case has not been overruled.

- Majority Author: This task tests the AI's ability to correctly identify the author of a majority opinion in a given case.